Video Technology 101

January 20, 2025 · 21 min read

Pixel: a combination of `picture` and `element`


Do you really understand video technology? What are frame rate, resolution, and bit rate, and how do they relate to each other? Do you know what the p and K mean in terms such as 1080p and 4K? Do you know how to describe video quality? What on earth is a Blu-ray Disc? What is the difference between codecs such as H.264/AVC, H.265/HEVC, and AV1? You may have noticed Apple ProRes on Apple devices, but do you know what it is for? Do you know HDR and Dolby Vision? And why are there so many file extensions, such as .mp4, .mkv, and .flv?

Now this Video Technology 101 will introduce them to you.

Before we begin, note that GiB (gibibyte, 1 GiB = 2^30 bytes) and GB (gigabyte, 1 GB = 10^9 bytes) are not the same thing.

GB is normally used for the capacity of storage devices such as hard disks and SSDs, and for software package sizes. GiB is normally used for RAM, file system capacity, and other quantities that are naturally expressed in binary.
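To make the difference concrete, here is a minimal sketch that converts between the two units. The function name `gb_to_gib` is just an illustrative choice, not something from a library.

```python
GB = 10**9    # gigabyte: decimal unit
GIB = 2**30   # gibibyte: binary unit


def gb_to_gib(gigabytes: float) -> float:
    """Convert a size in decimal gigabytes to binary gibibytes."""
    return gigabytes * GB / GIB


# A disk sold as "500 GB" holds fewer gibibytes than the label suggests.
print(round(gb_to_gib(500), 2))  # 465.66
```

This gap is why a drive's advertised capacity and the capacity reported by a tool that counts in binary units can differ.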

Bandwidth #

Bandwidth is generally measured in bps (or b/s), short for bits per second: the number of bits transmitted each second. ISPs normally quote bandwidth in Mbps, so divide by 8 to get the more familiar MBps (megabytes per second). Bandwidth is mainly a concept from the transmission field.
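The divide-by-8 rule can be sketched in a few lines. The helper names below are hypothetical, and the download estimate ignores protocol overhead, so treat it as an upper bound on speed.

```python
def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert megabits per second to megabytes per second (8 bits per byte)."""
    return mbps / 8


def download_seconds(file_size_mb: float, mbps: float) -> float:
    """Ideal time to download a file of file_size_mb megabytes on an mbps link."""
    return file_size_mb / mbps_to_megabytes_per_second(mbps)


print(mbps_to_megabytes_per_second(100))  # 12.5
print(download_seconds(25, 100))          # 2.0
```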

Frame #

The number of frames shown in one second is called the frame rate, usually expressed in fps (frames per second). Common video frame rates are 24 fps, 25 fps, 29.97 fps, and 30 fps. Even today, the vast majority of films are still shot at 24 fps.

The refresh rate of the screen #

The refresh rate of a screen is the number of times the screen is redrawn per second, usually expressed in Hz (hertz). Common refresh rates are 60 Hz, 75 Hz, 120 Hz, and 144 Hz. If the frame rate is higher than the refresh rate, the extra frames are discarded.

As a rule of thumb, phones record at 30 fps, TV uses 25/30 fps (most common) or 50/60 fps (fast-motion footage such as sports), and high-speed cameras record at 120/240 fps (for slow motion).

Frame rate and refresh rate are different concepts: the frame rate is a property of the video, while the refresh rate is a property of the device. Even if your display runs at 120 Hz, a 24 fps video still shows only 24 distinct frames per second.

This reminds me of my childhood: when I played CF and the picture did not look smooth, I would turn off vertical synchronization (VSync) to improve smoothness. VSync makes the game deliver frames in step with the display's refresh rate.

Resolution #

The resolution of a video/screen is the number of pixels in the horizontal and vertical directions.

P or i? #

E.g., if the resolution of a video is 1920x1080, the video has 1920 pixels in the horizontal direction and 1080 pixels in the vertical direction (with a 16:9 aspect ratio, 1080 / 9 * 16 = 1920). In total it has 1920 * 1080 = 2073600 pixels.

Traditionally, we call this resolution `1080p`: the vertical resolution is 1080, and the `p` stands for progressive scan.

The difference between progressive scan (1080p) and interlaced scan (1080i):

- Interlaced scan: Each frame is split into two fields, and each field contains half of the lines that make up the image: the first field holds only the odd lines, the second only the even lines. The two fields are displayed alternately, and the human brain merges them into one image without noticing that it is made up of two halves. This saves bandwidth: at 60 fields per second, interlaced scan delivers only 30 full frames per second, so at any moment the monitor shows only half of the lines the camera recorded.

- Progressive scan: All the lines of a frame are displayed at once.
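The odd/even split can be sketched with plain lists, where each list element stands for one line of the picture. This is only a toy model of the idea (the names `split_fields` and `weave` are my own), not how a real deinterlacer works.

```python
def split_fields(frame):
    """Split a frame (a list of rows) into its odd-line and even-line fields."""
    odd_field = frame[0::2]   # lines 1, 3, 5, ... when counting from 1
    even_field = frame[1::2]  # lines 2, 4, 6, ...
    return odd_field, even_field


def weave(odd_field, even_field):
    """Rebuild a full frame by interleaving the two fields line by line."""
    frame = []
    for i, row in enumerate(odd_field):
        frame.append(row)
        if i < len(even_field):
            frame.append(even_field[i])
    return frame


frame = ["line1", "line2", "line3", "line4", "line5", "line6"]
odd, even = split_fields(frame)
print(odd)                        # ['line1', 'line3', 'line5']
print(even)                       # ['line2', 'line4', 'line6']
print(weave(odd, even) == frame)  # True
```

If the two fields were captured at different moments, weaving them back together is exactly where the motion artifacts come from.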

While the human eye usually can't notice that a picture is `1080i`, `1080p` has clear advantages in scenes with lots of motion. In interlaced video, a fast-moving object can change position between the first field and the second, creating a blurry or glitchy image for the viewer.

Compare the 1080i and 720p source: https://youtu.be/Avvh0iH2xSg

Refer to the industry standard:

- 480p SD(Standard Definition)

- 720p HD(High Definition)

- 1080p HD or FHD (Full High Definition)

- 2160p 4K UHD (Ultra High Definition)

- 4320p 8K UHD

K #

Now we can talk about K. Unlike p and i, which count vertical lines, K describes the approximate number of horizontal pixels, in thousands, and comes from cinema standards. In this sense you can call 1920x1080 1.9K or 2K. 3840x2160 is the 4K of the TV standard (its actual name is UHD, Ultra High Definition), and 4096×2160 is the 4K of cinema.

Strictly speaking, `4K` and `2K` do not denote one fixed resolution; they only describe the approximate horizontal pixel count.

Aspect Ratio #

The aspect ratio of a video is the ratio of its width to its height. The common values are 16:9 and 4:3. For resolutions of 480p (SD) and below, the aspect ratio is normally 4:3, so 480p is 640x480 (480 / 3 * 4 = 640).
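The width calculations used so far (1080 / 9 * 16 and 480 / 3 * 4) can be wrapped in a small helper. This is a sketch with hypothetical function names; it uses integer arithmetic and assumes the height divides evenly.

```python
from fractions import Fraction


def width_for_height(height: int, aspect_w: int = 16, aspect_h: int = 9) -> int:
    """Width in pixels for a given height and aspect ratio."""
    return height * aspect_w // aspect_h


def aspect_ratio(width: int, height: int) -> tuple:
    """Reduce width:height to its simplest form, e.g. 1920x1080 -> (16, 9)."""
    ratio = Fraction(width, height)
    return ratio.numerator, ratio.denominator


print(width_for_height(1080))       # 1920
print(width_for_height(480, 4, 3))  # 640
print(aspect_ratio(3840, 2160))     # (16, 9)
```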

Bit Rate / Data Rate #

The bit rate is also called the data rate: the former name emphasizes the binary bits, the latter the data as a whole.

It is the amount of data the encoder produces per second, usually given in kbps. For example, 100 kbps means the encoder generates 100 kilobits of data per second. We rarely describe a video by its total size because durations differ; instead we use the data rate, i.e. the amount of data the encoder produces, or the video contains, per second (1 Mbps = 1000 kbps).

We can estimate the data rate of a 2-hour film whose size is 35 GB: 35 × 1024 × 1024 × 8 / 2 / 3600 ≈ 40778 kbps ≈ 41 Mbps. The higher the bit rate, the more detailed the picture.
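The same estimate as a small sketch. It follows the arithmetic in the text (1024-based size units, then rounding to Mbps by dividing by 1000); the function name is my own.

```python
def average_bitrate_kbps(size_gb: float, duration_hours: float) -> float:
    """Average bit rate in kbps for a file of size_gb played over duration_hours."""
    size_kilobits = size_gb * 1024 * 1024 * 8  # GB -> KB -> kilobits, as in the text
    duration_seconds = duration_hours * 3600
    return size_kilobits / duration_seconds


# The 35 GB, 2-hour film from the example, rounded to Mbps.
print(round(average_bitrate_kbps(35, 2) / 1000))  # 41
```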

The data rate can also be categorized as Variable Bit-Rate (VBR) or Constant Bit-Rate (CBR).

- VBR: The data rate is not fixed; it changes with the content of the video. Often used by video websites.

- CBR: The data rate is fixed; common scenarios are live streaming and TV broadcast.

Here is a question: a video file is 10 MB in size and plays for 2 minutes 21 seconds. How many people can share a 10 Mbps network to watch it? The answer is at the end.

Relationship between frame rate, resolution, and bit rate #

How to describe the video quality #

Image quality is usually described by PSNR (peak signal-to-noise ratio), the ratio between the maximum possible signal power and the power of the noise introduced by compression. A compressed image with a PSNR near 50 dB is very clear, and anything above 35 dB is acceptable.

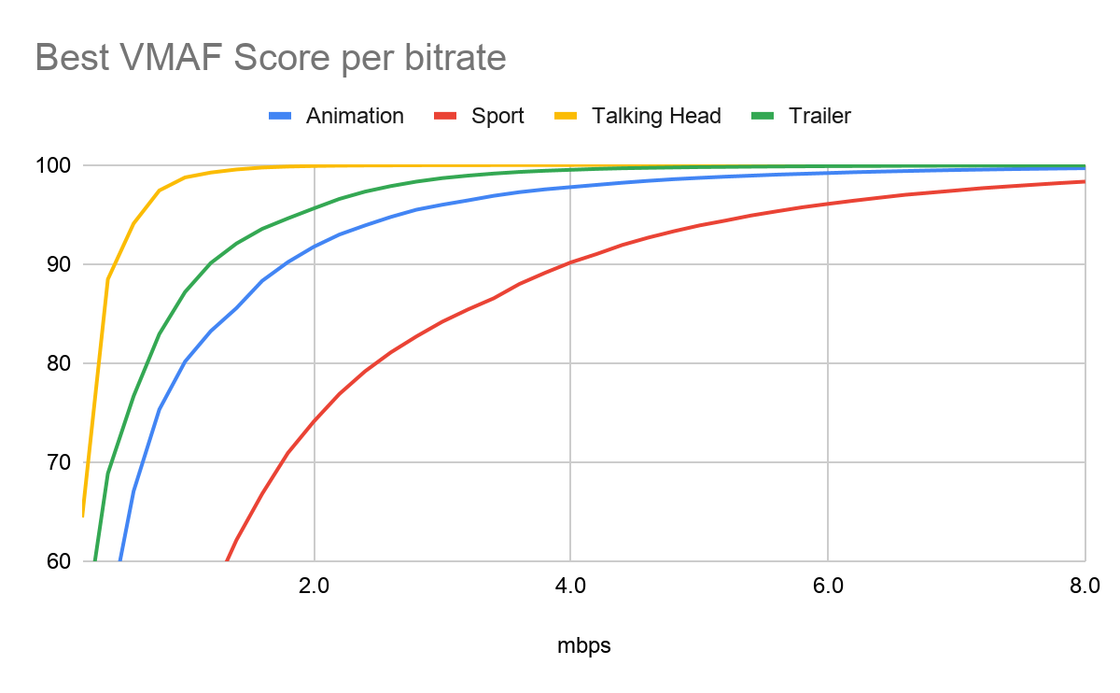
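For reference, PSNR is commonly computed as 10 · log10(MAX² / MSE), where MSE is the mean squared error between the original and the compressed samples and MAX is the largest possible sample value (255 for 8-bit). A minimal sketch, treating an image as a flat list of 8-bit samples (the function name and sample data are illustrative):

```python
import math


def psnr(original, compressed, max_value=255):
    """Peak signal-to-noise ratio in dB between two equally sized sample lists."""
    if len(original) != len(compressed) or not original:
        raise ValueError("inputs must be non-empty and the same length")
    mse = sum((a - b) ** 2 for a, b in zip(original, compressed)) / len(original)
    if mse == 0:
        return math.inf  # identical images: no noise at all
    return 10 * math.log10(max_value ** 2 / mse)


original = [52, 55, 61, 59]
compressed = [53, 55, 60, 59]  # two samples are off by one
print(f"{psnr(original, compressed):.2f} dB")
```

A tiny error like this lands a little above 50 dB, which matches the "very clear" range mentioned above.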

For video quality, we can use VMAF (Video Multi-Method Assessment Fusion) from Netflix.

Understand the relationship #

Different kinds of video require different data rates. E.g., animation contains many flat blocks of color, so it requires a lower data rate than sports.

So, centering on the data rate:

When the data rate is flexible, a higher frame rate means more frames per second, so the encoder needs more data to encode them and the video file gets larger.

When the data rate is fixed, a higher resolution means more pixels, but the encoder's output is fixed, so fewer bits are available per pixel and the picture shows more blur and artifacts. At a lower resolution, each pixel gets more bits and the picture is cleaner.

You can refer to Netflix's tech blog:

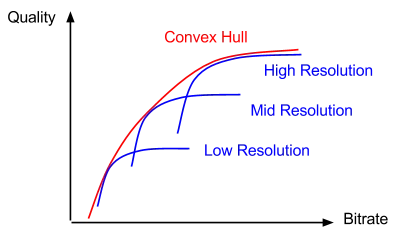

At each resolution, the quality of the encode monotonically increases with the bitrate, but the curve starts flattening out (eg A and B) when the bitrate goes above some threshold. This is because every resolution has an upper limit in the perceptual quality it can produce. When a video gets downsampled to a low resolution for encoding and later upsampled to full resolution for display, its high frequency components get lost in the process.

On the other hand, a high-resolution encode may produce a quality lower than the one produced by encoding at the same bitrate but at a lower resolution (see C and D). This is because encoding more pixels with lower precision can produce a worse picture than encoding less pixels at higher precision combined with upsampling and interpolation. Furthermore, at very low bitrates the encoding overhead associated with every fixed-size coding block starts to dominate in the bitrate consumption, leaving very few bits for encoding the actual signal. Encoding at high resolution at insufficient bitrate would produce encoding artifacts such as blocking, ringing and contouring. Encoding artifacts are typically more annoying and visible than blurring introduced by downscaling (before the encode) then upsampling at the member’s device.

From Netflix's tech blog

If we collect all these regions from all the resolutions available, they collectively form a boundary called convex hull. Ideally, we want to operate exactly at the convex hull, but due to practical constraints, we would like to select bitrate-resolution pairs that are as close to the convex hull as possible.

So on a video website, if your device does not support a higher resolution but you have more bandwidth, the site can offer you a higher-bit-rate version at the same resolution to improve your experience.

Blu-ray Disc #

Many video websites offer a quality option named Blu-ray. In fact, Blu-ray Disc means a disc that is read and written with a blue laser; it is a storage medium, not a video resolution. It is the successor to DVD (720×480) and VCD (352×240).

In 2015, the Blu-ray Disc Association launched Ultra HD Blu-ray, with a capacity of up to 100 GB and support for 4K UHD video at 3840×2160 encoded with H.265/HEVC.

Products based on the Ultra HD Blu-ray standard followed in 2016; the standard requires HDR10 support and allows Dolby Vision as an option.

Encode is efficient #

But if a video has a high resolution, frame rate, and data rate, the file will be very large, and playing it over the internet wastes a lot of bandwidth. This is where compressing the video with an encoding method comes in.

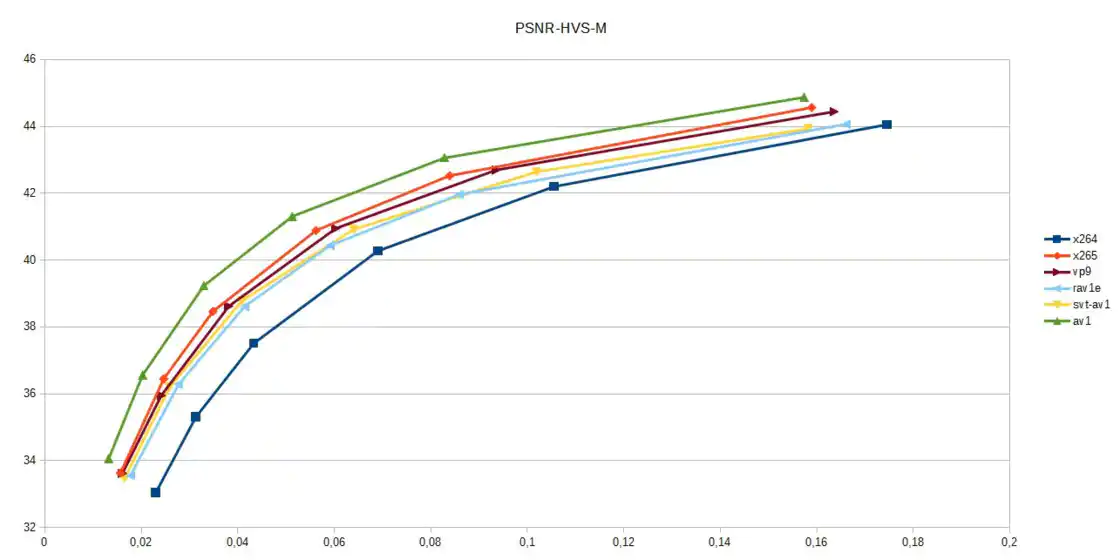

The x axis is the data rate

Different encoding methods give you different data rates for the same content. The cost is extra compression work and poorer compatibility.

So what is the codec? #

Nowadays, we shoot video with a camera; the frames are encoded by an encoder and saved on the device, and finally played back through a decoder, which turns the compressed video data back into uncompressed color data such as YUV420P or RGB.

Codec is a blend of the words encoder (coder) and decoder.

The commonly seen file extensions .mp4, .mkv, and .flv have nothing to do with the encoding. Treat them as containers: each container can hold differently encoded video, audio, subtitles, and other data.

H.264/AVC #

H.264 is the most common codec for video compression. It was released in 2003, developed jointly by ITU-T VCEG and ISO/IEC MPEG (Moving Picture Experts Group), and is the successor to MPEG-2. It goes by several names:

- H.264

- AVC(Advanced Video Coding)

- MPEG-4 Part 10

H.265/HEVC #

- H.265

- HEVC(High Efficiency Video Coding)

- MPEG-H Part 2

H.265 is the successor to H.264. Its main advantage is more efficient compression: at the same visual quality it saves about 50% of the bit rate, so the file is correspondingly smaller. Besides, H.265 supports resolutions beyond 4K, e.g. 8K. Dolby Vision content, for example, is almost always encoded with H.265.

But it requires patent licensing fees.

H.266/VVC #

Released in 2020, H.266/VVC offers about 50% higher compression efficiency than H.265/HEVC.

VP8/9 #

They were released by Google and are free of charge.

AV1 #

AV1 (AOMedia Video 1) was released in 2018 by the Alliance for Open Media, whose members include Apple, Amazon, Cisco, Google, Intel, Microsoft, Mozilla, and Netflix. It is about 20% more efficient than VP9.

Apple ProRes #

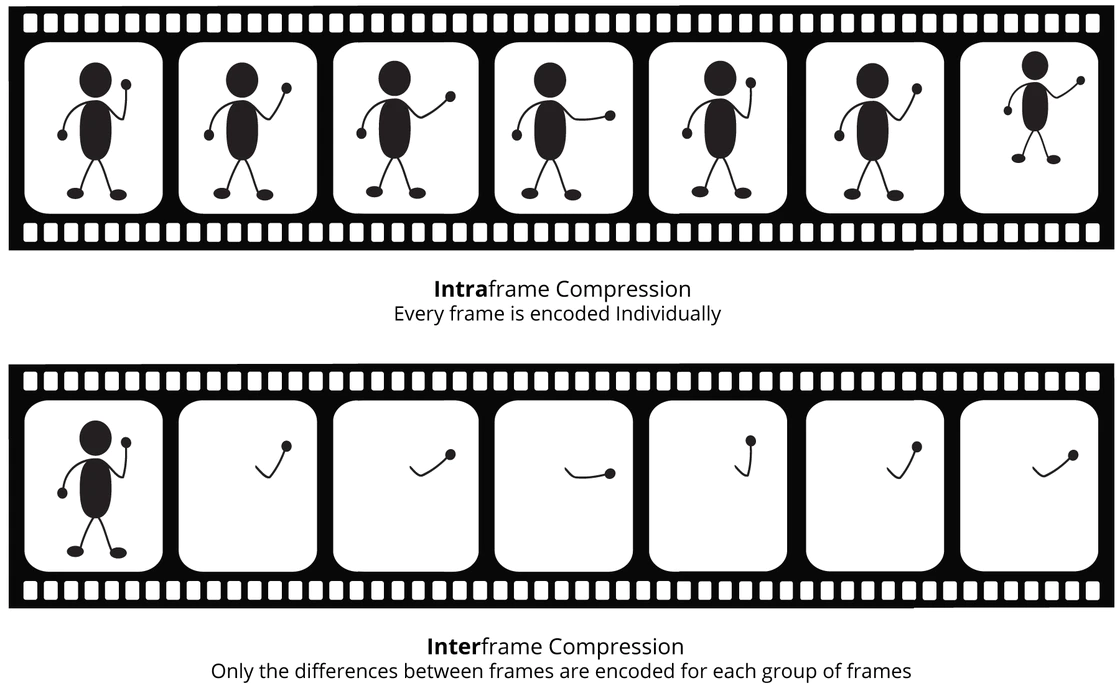

Unlike the codecs above, Apple ProRes uses intra-frame compression rather than inter-frame compression.

As we can see, intra-frame compression stores every frame in full, so the file is larger than with inter-frame compression. But it has significant advantages:

- Decoding a frame does not require computing other frames, so it places lower demands on the device.

- Editing is easier, because any frame can be cut or changed independently.

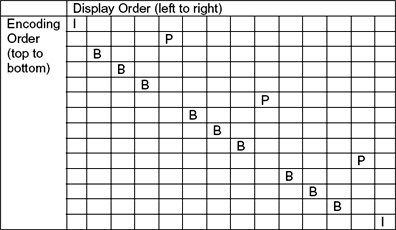

For better understanding, you should know the `I`, `P`, and `B` frames used in inter-frame compression. In a video, every frame is a picture, but when saving them we should consider compressing them.

- I: Intra-coded frame. It is independent and is encoded using only the current frame.

- P: Predictive-coded frame. It is predicted from the previous I or P frame.

- B: Bi-directionally predictive-coded frame. It is predicted from the previous I or P frame and the next I or P frame.

In a video encoding sequence, GOP (group of pictures) refers to the distance between two I frames, and Reference (reference period) refers to the distance between two P frames. An I frame occupies more bytes than a P frame, and a P frame occupies more bytes than a B frame.

So at the same bit rate, the larger the GOP value, the more P and B frames there are, the more bytes are available for each I, P, and B frame, and the better the image quality. Likewise, the larger the Reference value, the more B frames there are, and the same holds.

In a GOP, the largest frame is the I frame, so, relatively speaking, the larger the gop_size, the better the image quality. However, the decoder must start from the first I frame it receives to reconstruct the original image correctly; otherwise it cannot decode. Another technique for improving video quality is to use more B frames. Generally, the compression ratio is about 7 for I frames (similar to JPG), 20 for P frames, and up to 50 for B frames. B frames therefore save a lot of space, and the saved space can be spent on more I frames, giving better image quality at the same bit rate. So gop_size should be set according to the business scenario to get the best video quality.

Normally, there is one P frame after the I frame, with 2~3 B frames between the I frame and that P frame, and 2~3 B frames between every two P frames. A B frame carries the difference between itself and the preceding I or P frame, or between itself and the following P or I frame, or between itself and the average of the surrounding I/P or P/P frames. When the content changes a lot, the interval between two I frames should be smaller; when it changes little, the I-frame interval can be larger.
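To get a feel for how much the frame types matter, here is a rough sketch that applies the rule-of-thumb compression ratios quoted above (7, 20, 50) to a GOP pattern. Real encoders vary widely with content, so the numbers are only illustrative, and the function name and the 12-frame pattern are my own assumptions.

```python
# Rule-of-thumb compression ratios from the text; real values depend on content.
COMPRESSION_RATIO = {"I": 7, "P": 20, "B": 50}


def gop_compressed_bytes(pattern: str, raw_frame_bytes: float) -> float:
    """Estimated size of one GOP, given its frame types in order (e.g. 'IBBP')."""
    return sum(raw_frame_bytes / COMPRESSION_RATIO[frame_type] for frame_type in pattern)


raw_frame = 1920 * 1080 * 3  # one uncompressed 8-bit RGB 1080p frame, in bytes
mixed = gop_compressed_bytes("IBBPBBPBBPBB", raw_frame)
all_intra = gop_compressed_bytes("I" * 12, raw_frame)
print(f"mixed GOP is {all_intra / mixed:.1f}x smaller than 12 I frames")
```

The same comparison also illustrates why an intra-only codec such as ProRes produces much larger files.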

So the order of encoding and display is not the same:

The upper is encoding order, the lower is display order
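The reordering can be sketched as follows: a B frame needs the reference frame that comes after it in display order, so that reference has to be encoded (and decoded) first. This is a simplified model with made-up frame labels, not the behavior of any particular encoder.

```python
def display_to_coding_order(display_order):
    """Reorder frames so every B frame follows both of its reference frames."""
    coding_order = []
    pending_b = []  # B frames waiting for their next reference frame
    for frame in display_order:
        if frame.startswith("B"):
            pending_b.append(frame)
        else:
            # An I or P frame: emit it first, then the B frames that depend on it.
            coding_order.append(frame)
            coding_order.extend(pending_b)
            pending_b = []
    coding_order.extend(pending_b)  # trailing B frames with no later reference
    return coding_order


display = ["I0", "B1", "B2", "P3", "B4", "B5", "P6"]
print(display_to_coding_order(display))
# ['I0', 'P3', 'B1', 'B2', 'P6', 'B4', 'B5']
```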

Color Depth #

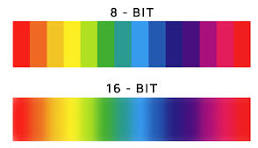

The color depth is the number of bits used to represent the color of a pixel. In RGB, common color depths are 8-bit, 10-bit, and 12-bit per channel.

With 8-bit color depth, each of the red, green, and blue channels has 2^8 = 256 levels, so the total number of colors is 256^3 = 16777216.
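The same calculation for the other common bit depths, as a small sketch (the function name is illustrative):

```python
def colors_for_bit_depth(bits_per_channel: int, channels: int = 3) -> int:
    """Number of distinct colors for a given per-channel bit depth."""
    levels = 2 ** bits_per_channel  # shades available per channel
    return levels ** channels


for bits in (8, 10, 12):
    print(bits, colors_for_bit_depth(bits))
# 8 16777216
# 10 1073741824
# 12 68719476736
```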

Besides, engineers found that human eyes are more sensitive to brightness than to color, so it is not necessary to store the full color signal. We can allocate more bandwidth to the black-and-white signal (“luma”, the brightness) and less to the color signal (“chroma”). This is where YUV comes from: Y is the luma (brightness), and U and V are the chroma.
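As a rough illustration of the saving, the sketch below compares the raw size of an 8-bit RGB frame with an 8-bit YUV 4:2:0 frame such as YUV420P. The 4:2:0 layout (one U and one V sample per 2×2 block of pixels) is an assumption I am adding here; the function names are my own.

```python
def rgb_frame_bytes(width: int, height: int) -> int:
    """Raw size of an 8-bit RGB frame: three full-resolution channels."""
    return width * height * 3


def yuv420p_frame_bytes(width: int, height: int) -> int:
    """Raw size of an 8-bit YUV 4:2:0 frame: full-res Y, quarter-res U and V."""
    luma = width * height
    chroma_plane = ((width + 1) // 2) * ((height + 1) // 2)  # one sample per 2x2 block
    return luma + 2 * chroma_plane


print(rgb_frame_bytes(1920, 1080))      # 6220800
print(yuv420p_frame_bytes(1920, 1080))  # 3110400
```

Under this assumption the frame is half the size before any codec is applied at all.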

For more info about images, I will introduce them in the article Image Technology 101.

HDR #

HDR, short for High Dynamic Range, is an emerging concept in the video field. Compared with SDR (Standard Dynamic Range), HDR can display a wider range of colors and brightness.

HDR also has a lot of different standards, such as:

- HDR10

- HLG

- HDR10+

- Dolby Vision

HDR10 #

HDR10 is an open standard announced by the Consumer Technology Association in 2015. It requires no licensing fees, and the “10” comes from its 10-bit color depth. Beyond the color depth, HDR10 recommends the wide color gamut Rec.2020, the PQ (SMPTE ST 2084) absolute-luminance transfer function, and static metadata. It supports 1000 nits.

HDR10 is mainly designed for streaming media and is not well suited to film.

HLG #

But when a non-HDR device, such as an ordinary TV with a maximum brightness of 300 nits, displays HDR10 video, the picture looks very dark. HLG was designed for this case. NHK and the BBC are its main backers; it lets each device map the 1000-nit signal to its own capabilities.

Dolby Vision #

Dolby Vision was created by Dolby Laboratories. It uses 12-bit color depth and the Rec.2020 color gamut, and supports up to 10000 nits.

It also supports dynamic metadata, which adjusts color and brightness scene by scene or frame by frame, bringing out the contrast progressively.

HDR10+ #

HDR10+ was created by Samsung and also uses dynamic metadata.

Video Format #

Note: the video format is different from the video codec.

A video format is a way to package video data, audio data, subtitle data, and so on into one file. The file format is the video format: if a video is packaged in a certain format, its file extension usually reflects it. Common video formats are:

- AVI (.avi)

- ASF (.asf)

- WMV (.wmv)

- QuickTime (.mov)

- MPEG (.mpg / .mpeg)

- MP4 (.mp4)

- m2ts (.m2ts / .mts )

- Matroska (.mkv / .mks / .mka )

- TS

- FLV

AVI #

Audio Video Interleaved is a nearly outdated technology. The file structure is divided into three parts: header, body, and index. The image and sound data are stored in the body, and the index is used to locate them. AVI itself only provides this framework; the image and sound data inside can use any encoding. Because the index is placed at the end of the file, AVI is not suitable for network streaming: if you download an AVI file from the network and the download is incomplete, it is difficult to play properly.

FLV #

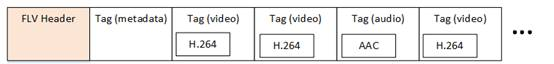

FLV is a packaging format released by Adobe that can be used for both live streaming and on-demand scenarios. The format is extremely simple, and each FLVTAG is an independent entity.

An FLV file consists of a file header (9 bytes) followed by many tags (the FLV body). Each tag contains audio or video data and is accompanied by a preTagSize field that records the size of the previous tag. The structure of FLV is shown in the figure above.

And the tags can be divided into 3 types:

- Video: Video Tag

- Audio: Audio Tag

- Script: Script Tag(Metadata Tag)

A normal FLV file consists of a header, a script tag, and several video and audio tags.

The header is 9 bytes long. The first 3 bytes are the file signature, always “FLV” (0x46 'F', 0x4C 'L', 0x56 'V'). The 4th byte is the version number. The 5th byte holds the stream flags: bit 0x01 set means video is present, bit 0x04 set means audio is present, 0x01 | 0x04 (0x05) means both are present, and all other bits are 0. The last 4 bytes give the length of the FLV header: 3 + 1 + 1 + 4 = 9.
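The 9-byte layout described above maps directly onto a `struct` format string. The following is a minimal sketch that only reads the header; the function name, the returned dictionary keys, and the sample bytes are my own choices.

```python
import struct


def parse_flv_header(data: bytes) -> dict:
    """Parse the 9-byte FLV file header described in the text."""
    if len(data) < 9:
        raise ValueError("an FLV header needs at least 9 bytes")
    # ">3sBBI": 3-byte signature, 1-byte version, 1-byte flags, 4-byte big-endian size
    signature, version, flags, header_size = struct.unpack(">3sBBI", data[:9])
    if signature != b"FLV":
        raise ValueError("not an FLV file")
    return {
        "version": version,
        "has_video": bool(flags & 0x01),
        "has_audio": bool(flags & 0x04),
        "header_size": header_size,
    }


# Hand-built header: version 1, audio + video (0x05), header length 9.
sample = b"FLV\x01\x05\x00\x00\x00\x09"
print(parse_flv_header(sample))
# {'version': 1, 'has_video': True, 'has_audio': True, 'header_size': 9}
```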

M3U8 #

M3U8 is a common streaming media format, mainly in the form of a file list, supporting both live streaming and on-demand.

#EXTM3U // m3u8 header

#EXT-X-VERSION:3

#EXT-X-TARGETDURATION:4

#EXT-X-MEDIA-SEQUENCE:0 # if not EXT-X-ENDLIST the sequences will always be played from the last 3 sequences.

#EXTINF:3.760000,

out0.ts

#EXTINF:1.880000,

out1.ts

#EXTINF:1.760000,

out2.ts

#EXTINF:1.040000,

out3.ts

#EXTINF:1.560000,

out4.ts

FFmpeg has built-in HLS packaging support: choosing the HLS output format makes it perform HLS packaging.
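To show how a player might read such a list, here is a minimal sketch that extracts the segments and their durations from a playlist like the one above. It handles only the `#EXTINF` tag and segment URIs, which is far less than a real HLS client does; the function name and the embedded sample are illustrative.

```python
def parse_media_playlist(text: str):
    """Return a list of (segment_uri, duration_seconds) from an m3u8 playlist."""
    segments = []
    pending_duration = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if line.startswith("#EXTINF:"):
            # "#EXTINF:3.760000," -> 3.76 (an optional title follows the comma)
            pending_duration = float(line[len("#EXTINF:"):].split(",")[0])
        elif line and not line.startswith("#"):
            if pending_duration is not None:
                segments.append((line, pending_duration))
                pending_duration = None
    return segments


playlist = """#EXTM3U
#EXT-X-VERSION:3
#EXT-X-TARGETDURATION:4
#EXT-X-MEDIA-SEQUENCE:0
#EXTINF:3.760000,
out0.ts
#EXTINF:1.880000,
out1.ts
#EXTINF:1.760000,
out2.ts
"""

segments = parse_media_playlist(playlist)
is_live = "#EXT-X-ENDLIST" not in playlist  # no end marker: more segments may follow
print(segments)
print(is_live)  # True
```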

MP4 #

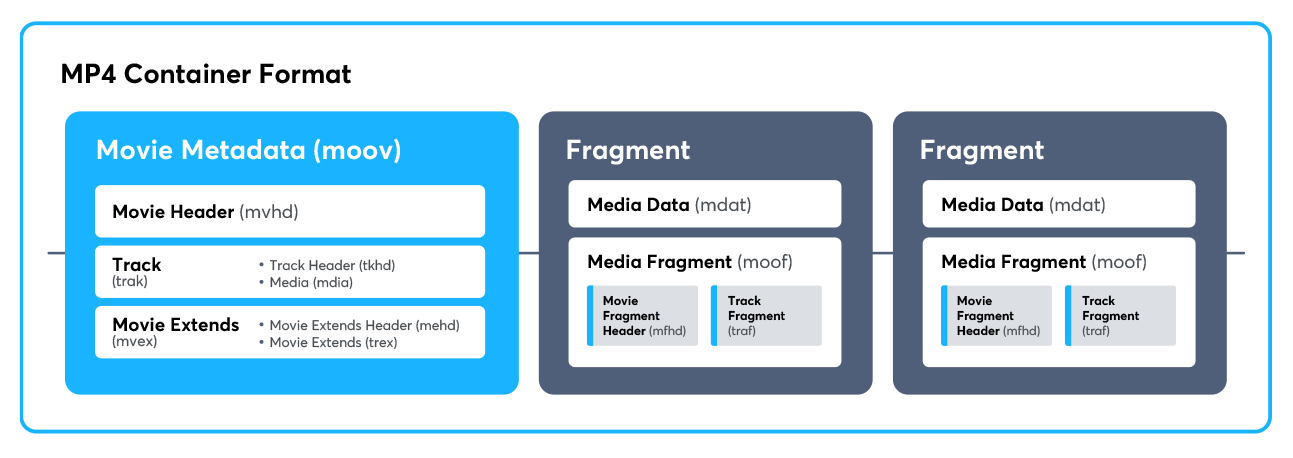

MP4 actually stands for MPEG-4 Part 14, i.e. the 14th part of the MPEG-4 standard, an ISO/IEC standard. MP4 mainly aims to support fast forward and rewind as well as playing while downloading. It is the most common video format in the industry.

The MP4 file format is a very open container that can describe almost any media structure. The media description and the media data are stored separately, and the media data can be organized freely: it need not be in chronological order and can even be referenced from other files. MP4 also supports streaming. It is widely used to package H.264 video and AAC audio.

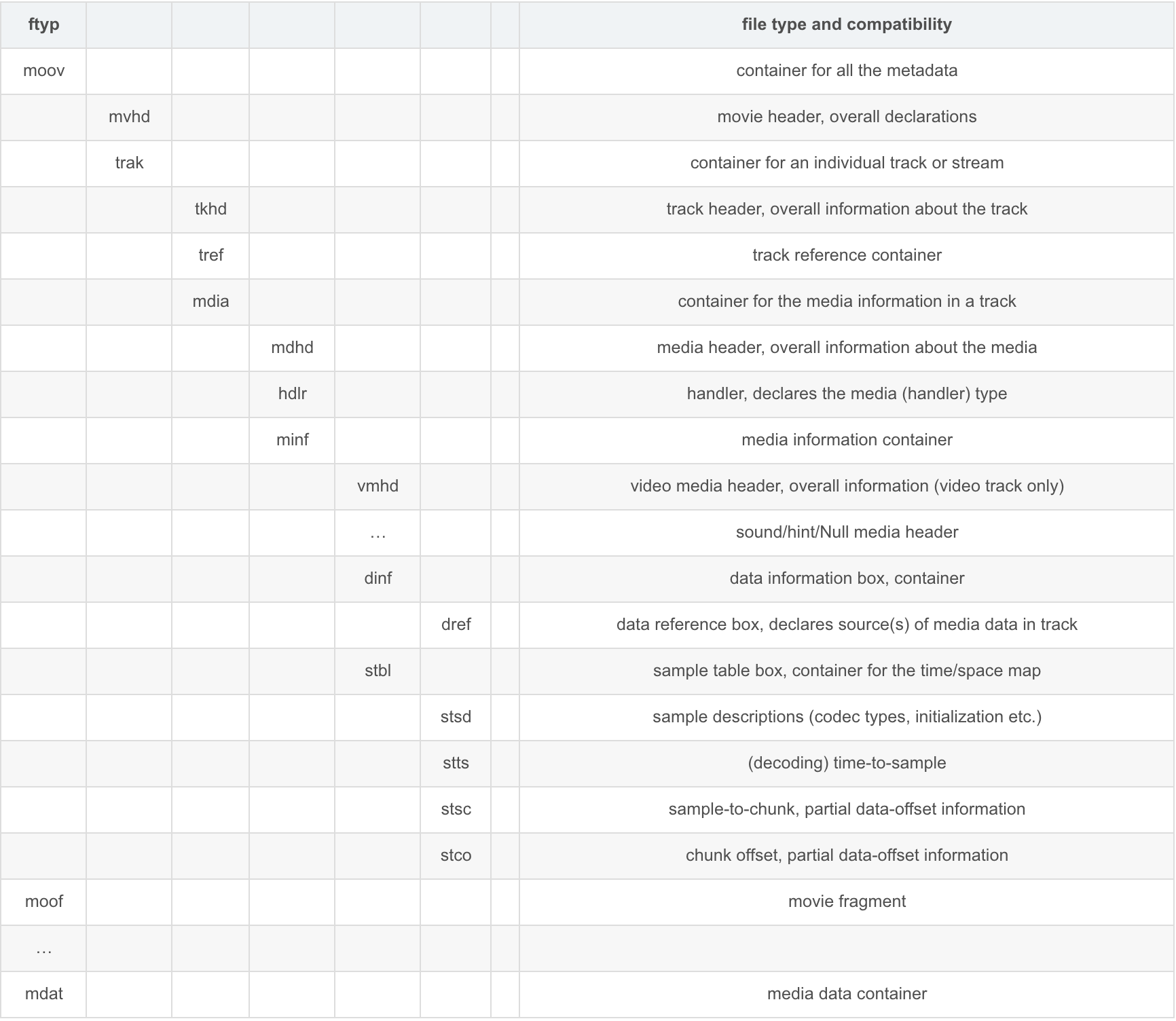

All MP4 data lives in boxes (called atoms in QuickTime): an MP4 file is composed of several boxes, each with a type and a length, and each can be thought of as a block of data.

A box can contain other boxes; such a box is called a container box.

- An MP4 file has one `ftyp` box, which marks the file as MP4 and contains some information about the file.

- Then there is a `moov` box (Movie Box), a container box whose sub-boxes hold the media metadata. `moov` is also unique within the file and contains one `mvhd` and several `trak` boxes. `mvhd` is the header box and usually appears as the first sub-box of `moov`; it defines the characteristics of the entire movie, usually media-independent information such as playback duration and creation time.

- The media data of the MP4 file is contained in `mdat` boxes (Media Data Box). There can be several of them, or none at all (when the media data is all referenced from other files); the structure of the media data is described by the metadata.

Normal MP4 playback requires the ftyp and moov boxes to be fully loaded, plus some frames from the mdat box, before playback can start.
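The box structure is simple enough to walk by hand: each box starts with a 4-byte big-endian size followed by a 4-byte type. Below is a minimal sketch that lists only the top-level boxes of an in-memory byte string; the helper `make_box` and the tiny sample file are made up for the demonstration and do not form a playable MP4.

```python
import struct


def iter_top_level_boxes(data: bytes):
    """Yield (type, offset, size) for each top-level box in MP4 data."""
    offset = 0
    while offset + 8 <= len(data):
        size, box_type = struct.unpack(">I4s", data[offset:offset + 8])
        header_len = 8
        if size == 1:
            # A size of 1 means a 64-bit size follows the type field.
            if offset + 16 > len(data):
                break
            size = struct.unpack(">Q", data[offset + 8:offset + 16])[0]
            header_len = 16
        elif size == 0:
            # A size of 0 means the box runs to the end of the file.
            size = len(data) - offset
        if size < header_len:
            break  # corrupt size field; stop rather than loop forever
        yield box_type.decode("ascii", errors="replace"), offset, size
        offset += size


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a box for the demo: 4-byte size, 4-byte type, then the payload."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


sample = (
    make_box(b"ftyp", b"isom\x00\x00\x02\x00")
    + make_box(b"moov", b"")
    + make_box(b"mdat", b"\x00" * 4)
)
print(list(iter_top_level_boxes(sample)))
# [('ftyp', 0, 16), ('moov', 16, 8), ('mdat', 24, 12)]
```

Checking whether `moov` comes before `mdat` in this listing is a quick way to tell whether a file can start playing before it is fully downloaded.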

On top of that, there is fragmented MP4 (fMP4): the file is split into fragments, and the metadata of each fragment's samples lives in its own moof box, so the player does not need one complete moov box describing the whole file before it can start.

TS #

The Transport Stream (TS) is a streaming media container format that is widely used in live streaming and can serve both live and on-demand scenarios. Its file extension is .ts, .mpg, or .mpeg. The HLS protocol is based on the TS format.

The difference between fMP4 and ts #

The ts file does not provide information about the duration, so you cannot perform seek operations on the ts file. fMP4 provides information about the duration, so you can seek to a specific position. MPEG2-TS is a format that requires the video stream to be independently decodable from any segment.

Streaming video format #

We often call flv, rmvb, mov, and asf streaming video formats, because such a file can be decoded while it is being transmitted and does not need to be complete before playback starts. Characteristically, there is header information at the start (not strictly required) and the rest is packetized, so the file can be parsed packet by packet and can be of any size, with no need for an index. FLV, MPEG, RMVB, etc. can be parsed directly by packet, while MP4 and AVI must rely on an index table and a fixed starting point; if the index table is at the end of the file, the file cannot be parsed until it has fully arrived.

Bonus:

The streaming process is as follows:

- The host uses `rtmp` to push the stream to the CDN. The CDN lets the audience pull the stream in three ways: `http-flv`, `hls`, and `rtmp`. Most live streaming apps use `http-flv`.

- These protocols are like containers that carry the packaging formats: `rtmp` and `http-flv` carry `flv`, while `hls` carries `m3u8` and `ts`, and inside `m3u8` and `ts` are the audio and video.

For the same content, the data size is generally TS > MP4 > FLV.

The answer of the question #

A video file is 10 MB in size and plays for 2 minutes 21 seconds. How many people can share a 10 Mbps network?

Its bit rate is 10 * 1024 * 1024 * 8 / (2 * 60 + 21) = 594936.73759 bps

10 * 1024 * 1024 / 594936.73759 = 17.625 people

So the answer is 17 people.
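The calculation can be checked with a short sketch. It keeps the 1024-based convention used in the answer for both the file size and the link speed; the function name is hypothetical.

```python
def concurrent_viewers(file_size_mb: float, duration_seconds: float, link_mbps: float) -> int:
    """How many viewers a link can serve, rounding down to whole people."""
    video_bps = file_size_mb * 1024 * 1024 * 8 / duration_seconds
    link_bps = link_mbps * 1024 * 1024  # same 1024-based convention as the text
    return int(link_bps // video_bps)


print(concurrent_viewers(10, 2 * 60 + 21, 10))  # 17
```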

Recommended bit rate of a video:

bitrate = w * h * fps * factor (for streaming on a phone, the factor is 0.08)

E.g., for 720 x 480 at 25 fps the recommended bit rate is (720 * 480 * 25 * 0.08) / 1024 = 675 Kb/s, so a 100M bandwidth can be shared by 100 / 0.675 ≈ 148 people.
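The rule of thumb as a sketch, using the same factor of 0.08 and the same unit handling as the example above (the function name is my own, and the factor is the article's heuristic rather than a standard):

```python
def recommended_bitrate_kbps(width: int, height: int, fps: float, factor: float = 0.08) -> float:
    """Rule-of-thumb bit rate in Kb/s: w * h * fps * factor / 1024."""
    return width * height * fps * factor / 1024


kbps = recommended_bitrate_kbps(720, 480, 25)
print(round(kbps))               # 675
print(int(100 * 1000 / kbps))    # 148 viewers on a 100M link
```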

How about the device? #

For more information about devices, such as nits, ppi, and refresh rate, I will write a separate article.

Reference #

https://tagarno.com/blog/1080p-vs-1080-on-digital-microscopes/

https://www-file.huawei.com/-/media/corporate/pdf/ilab/30-cn.pdf

https://netflixtechblog.com/per-title-encode-optimization-7e99442b62a2

https://netflixtechblog.com/optimized-shot-based-encodes-for-4k-now-streaming-47b516b10bbb

https://netflixtechblog.com/optimized-shot-based-encodes-now-streaming-4b9464204830

https://en.wikipedia.org/wiki/Video_Multimethod_Assessment_Fusion

https://www.zhihu.com/question/265520537

https://github.com/CharonChui/AndroidNote/tree/master/VideoDevelopment

https://www.easemob.com/news/3614

http://www.52im.net/thread-235-1-1.html
